Containerization has changed how software is built and shipped by enabling consistent, portable, and scalable deployments. For developers, DevOps engineers, and IT professionals, a working knowledge of tools such as Docker and Kubernetes is central to building efficient workflows. This guide introduces the fundamentals, benefits, and best practices of containerization and points to real-world use cases to help you get started.

Getting Started with Containerization: Docker and Kubernetes

What is Containerization?

Containerization is an approach to software deployment in which an application is packaged together with its dependencies into a standardized unit called a container. Unlike traditional virtual machines, which each run a full guest operating system, containers share the host's kernel, which makes them lightweight and portable and lets them behave consistently across environments.

Packaging an application with its dependencies avoids the common “it works on my machine” problem and uses fewer resources than running a virtual machine per application. It also shortens testing cycles and simplifies deployment across different infrastructures, so teams can deliver applications more reliably and scale them more easily.

Benefits of Containerization

- Consistency: Containers bundle an application's dependencies, configuration, and libraries, so it runs the same way in development, testing, and production. Teams can deploy with more confidence and fewer environment-specific issues.

- Isolation: Each container runs in isolation from the others, so one application's library versions, configuration, or runtime cannot interfere with another's. Multiple services can therefore share a host without dependency clashes.

- Portability: Because a container image includes everything the application needs to run, the same image can be moved between systems and run without modification, provided the host has a compatible container runtime.

- Resource Efficiency: Containers share the host OS kernel, reducing the overhead of running multiple virtual machines.

- Fast Deployment: Containers start quickly and can be deployed in seconds, facilitating rapid scaling and updates.

Getting Started with Docker

Docker is a popular platform for developing, shipping, and running applications in containers.

- Installation: To begin working with Docker containers, you’ll first need to install Docker on your system. The installation process varies slightly depending on your operating system, but Docker provides comprehensive, platform-specific guides to walk you through each step.

- Docker Image: To containerize your application, write a Dockerfile that specifies the base image, dependencies, environment settings, and the command that starts your application, then build an image from it.

- Containerization: Now that you’ve built your Docker image, the next step is to create and run a container from it. Keep in mind that while an image serves as a static blueprint, a container represents a live, isolated instance of that image in execution.

- Networking: Containers communicate over Docker networks. Docker attaches containers to a default bridge network unless told otherwise; on a user-defined bridge network, containers can also reach each other by name, while containers on other networks remain isolated from them.

- Data Management: By default, files created inside a container live in its writable layer and are lost when the container is removed. To keep data that must outlive the container, use Docker volumes, which provide persistent storage that exists independently of any container.
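
The Docker steps above can be sketched as a short Python script that only assembles the relevant file and commands, without running anything. The image name `myapp:1.0`, the network `appnet`, the volume `appdata`, the `/data` mount path, port 8000, and the `app.py` / `requirements.txt` layout are all hypothetical placeholders; the Dockerfile instructions and the `docker` subcommands and flags are the standard ones.

```python
"""Sketch: the Docker workflow expressed as a Dockerfile plus CLI commands.

Nothing here is executed against Docker; the script only builds the text
you would use. All names (myapp:1.0, appnet, appdata, /data, port 8000)
are placeholders chosen for illustration.
"""
import shlex

# A minimal Dockerfile for a hypothetical Python app
# that consists of app.py and requirements.txt.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "app.py"]
"""


def docker_commands(image="myapp:1.0", network="appnet", volume="appdata"):
    """Return the build, network, volume, and run invocations as argv lists."""
    return [
        # Build an image from the Dockerfile in the current directory.
        ["docker", "build", "-t", image, "."],
        # Create a user-defined network and a named volume.
        ["docker", "network", "create", network],
        ["docker", "volume", "create", volume],
        # Start a detached container attached to both.
        [
            "docker", "run", "-d",
            "--name", "myapp",
            "--network", network,
            "-v", f"{volume}:/data",
            "-p", "8000:8000",
            image,
        ],
    ]


def show_plan():
    """Print the Dockerfile and the commands in copy-pasteable form."""
    print(DOCKERFILE)
    for argv in docker_commands():
        print(shlex.join(argv))
```

Calling `show_plan()` prints the Dockerfile followed by one shell command per line. Keeping the commands as argument lists rather than strings makes it straightforward to pass them to `subprocess.run` later if you decide to execute them.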

Introduction to Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications.

- Control Plane and Nodes: A Kubernetes cluster has a control plane (historically called the master) that manages the cluster, and worker nodes where containers run.

- Pods: The smallest deployable unit in Kubernetes is a pod, which can contain one or more containers that share the same network namespace.

- Deployments: Deployments define the desired state of a set of pods. Kubernetes ensures that the desired state is maintained, handling scaling, updates, and rollbacks.

- Services: Services provide a stable IP and DNS name for accessing pods. They enable load balancing and discovery of pods.

- Scaling: Kubernetes can automatically adjust the number of pods based on criteria you define, such as CPU utilization, so capacity follows demand.
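
To make the ideas of desired state and automatic scaling more concrete, here is a toy model in Python. It is not how Kubernetes is implemented: `reconcile` is a hypothetical helper that compares a desired replica count with the pods that are running, and `desired_replicas` applies the replica formula given in the Horizontal Pod Autoscaler documentation, clamped to assumed minimum and maximum bounds. The real autoscaler also applies tolerances and stabilization windows, which this sketch leaves out.

```python
"""Sketch: desired-state reconciliation and autoscaling arithmetic.

A simplified model for illustration only. Pod names and the default
bounds (1 to 10 replicas) are made up.
"""
import math


def reconcile(desired, running_pods):
    """Return (number_of_pods_to_start, pods_to_stop) to reach `desired`."""
    missing = desired - len(running_pods)
    if missing > 0:
        return missing, []
    # At or above the desired count: stop any surplus pods at the end.
    return 0, running_pods[desired:]


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Compute ceil(current_replicas * current_metric / target_metric),
    then clamp the result to [min_replicas, max_replicas]."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

For example, with 3 replicas averaging 90% CPU against a 60% target, `desired_replicas(3, 90, 60)` asks for 5 replicas, and `reconcile(5, ["pod-a", "pod-b", "pod-c"])` then reports that 2 more pods need to be started.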

Best Practices for Containerization

- Single Responsibility: Each container should have a single responsibility. For example, separate the application and database into different containers.

- Small Images: Keep Docker images as small as possible by only including necessary dependencies.

- Immutable Infrastructure: Treat containers and images as immutable. When an update is needed, create a new image and deploy it.

- Health Checks: Define health checks for your containers. Kubernetes can use them, in the form of liveness and readiness probes, to decide when to restart a container and when to send it traffic.

- Security: Apply security best practices, such as running containers with the least necessary privileges and regularly updating images to include security patches.
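
As one illustration of the health-check practice, the sketch below exposes a small HTTP health endpoint using only the Python standard library. The `/healthz` path, port 8080, and the contents of `CHECKS` are assumptions made for the example, not requirements; an HTTP probe in Kubernetes treats any status code from 200 to 399 as healthy, so the endpoint returns 200 when every check passes and 503 otherwise.

```python
"""Sketch: a tiny HTTP health endpoint built on the standard library.

The /healthz path, port 8080, and the checks are illustrative choices.
"""
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def health_response(checks):
    """Run each named check and return (status_code, json_body).

    200 means every check passed; 503 means at least one failed.
    """
    results = {name: bool(check()) for name, check in checks.items()}
    status = 200 if all(results.values()) else 503
    return status, json.dumps(results, sort_keys=True)


# Hypothetical checks; a real service would test its own dependencies here.
CHECKS = {"app": lambda: True}


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/healthz":
            self.send_error(404)
            return
        status, body = health_response(CHECKS)
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8080):
    """Start the health server; this call blocks until interrupted."""
    HTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()
```

Call `serve()` from your application's entry point, or run it in a background thread next to the main workload. Keeping the check logic in `health_response` separates it from the HTTP plumbing, which makes it easy to unit test.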

Real-World Examples

- Microservices: Containerization is often used to deploy microservices-based applications, where each microservice runs in its own container.

- Continuous Integration/Continuous Deployment (CI/CD): Containers simplify CI/CD pipelines by ensuring consistent environments for testing and deployment.

- Scalability: Kubernetes can automatically scale containers based on resource usage or incoming traffic.

- Hybrid Cloud: Containers provide a consistent deployment mechanism across different cloud providers and on-premises environments.

Conclusion

Containerization, supported by tools like Docker and Kubernetes, has changed how applications are developed, deployed, and managed. By adopting it, development and operations teams can gain flexibility, scalability, and efficiency in their software delivery pipeline.

We will manage / design your wix website

Rated 5.00 out of 5

We will manage / design your wix website

Rated 5.00 out of 5 Om Contact Form Pro

Rated 5.00 out of 5

Om Contact Form Pro

Rated 5.00 out of 5 NEW WAY

Rated 5.00 out of 5

NEW WAY

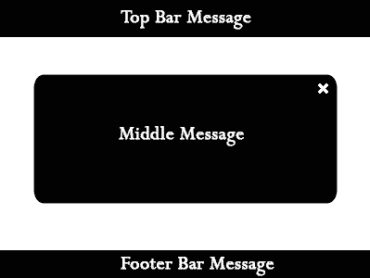

Rated 5.00 out of 5 Popup Shraddha Pro

Rated 5.00 out of 5

Popup Shraddha Pro

Rated 5.00 out of 5 Simana Royal Coming Soon Template

Rated 5.00 out of 5

Simana Royal Coming Soon Template

Rated 5.00 out of 5